Some cute round trip test tricks

In the last post, we looked at layering our deserialization code to keep things simple. This time, we’ll enjoy the delightful testing benefits this effort yields.

Round trip tests

We can do a round trip test whenever we pair an interface with some IO in the following fashion:

interface Service

{

void onThing(Thing thing);

}

void bind(final InputStream input, final Service service)

{

// This will deserialize method calls from the input and invoke

// them on the 'real' implementation that's been passed in

// We'll call this the receiver

}

Service bindToRemote(final OutputStream output)

{

// This will create an implementation that serializes the calls it receives

// onto the output stream. Presumably, a bound input sits somewhere at the other end.

// We'll call this the transmitter

}

This approach works, relatively easily, for any interface that only passes information from caller to callee (i.e. ‘tell, don’t ask’ like you mean it), also known as a messaging contract. The symmetry of the arrangement is its power; whatever we call on the transmitter should (eventually) be called on the receiver.
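To see that symmetry in miniature, here is a rough sketch of the pair above wired together in memory. It is an illustration under assumptions rather than the real thing: Thing is replaced by a plain String, the wire format is whatever DataOutputStream.writeUTF produces, and roundTrip is a hypothetical helper.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

final class ServiceRoundTripSketch
{
    // Simplified stand-in for the Service above: Thing is assumed to be a String.
    interface Service
    {
        void onThing(String thing);
    }

    // Transmitter: serializes each call it receives onto the output stream.
    static Service bindToRemote(final OutputStream output)
    {
        final DataOutputStream data = new DataOutputStream(output);
        return thing ->
        {
            try
            {
                data.writeUTF(thing);
            }
            catch (final IOException ioe)
            {
                throw new UncheckedIOException(ioe);
            }
        };
    }

    // Receiver: deserializes calls from the input and replays them on the real implementation.
    static void bind(final InputStream input, final Service service)
    {
        final DataInputStream data = new DataInputStream(input);
        try
        {
            while (true)
            {
                service.onThing(data.readUTF());
            }
        }
        catch (final EOFException endOfInput)
        {
            // The stream is exhausted, so there are no more calls to replay.
        }
        catch (final IOException ioe)
        {
            throw new UncheckedIOException(ioe);
        }
    }

    // Hypothetical helper: calls the transmitter, pipes the bytes across, returns what the receiver saw.
    static List<String> roundTrip(final List<String> things)
    {
        final ByteArrayOutputStream wire = new ByteArrayOutputStream();
        final Service transmitter = bindToRemote(wire);
        things.forEach(transmitter::onThing);

        final List<String> received = new ArrayList<>();
        bind(new ByteArrayInputStream(wire.toByteArray()), received::add);
        return received;
    }

    public static void main(final String[] args)
    {
        System.out.println(roundTrip(List.of("first", "second")));
    }
}
```

The round trip property is simply that roundTrip returns a list equal to the one it was given.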

N.B Inevitably at some point, I’m going to stop referring to method calls and start talking instead about messages. For me, method calls on messaging contracts === messages.

Let’s look at some concrete examples based on the two serialization problems we looked at last time.

Back to JsonElement et al

Reminder: our wire protocol has to take function calls on the following interface:

public interface AgentToServerApplicationProtocol

{

void reportAgentPhase(AgentPhase agentPhase);

void reportAgentStatus(AgentStatus status);

void reportCommandResult(long correlationId, LocalCommandResult<JsonElement> result);

}

…and serialize them over a websocket connection.

Here are (some of) the classes we’ll be using. The ones under test are AgentToServerProxy and AgentToServerProtocolReceiver. As a pair, they provide a transparent interface to transport function calls between one physical host and another.

public interface Sender

{

void send(final Consumer<OutputStream> sendee);

}

public class AgentToServerProxy implements AgentToServerApplicationProtocol

{

private final Sender sender;

public AgentToServerProxy(final Sender sender)

{

this.sender = sender;

}

// ... each method is json serialized, and we call

// ... the sender appropriately to do so, like this:

@Override

public void reportAgentPhase(final AgentPhase agentPhase)

{

final String jsonMessage = toJson("reportAgentPhase", agentPhase);

sendJson(jsonMessage);

}

private void sendJson(final String json)

{

sender.send(stream ->

{

try

{

stream.write(json.getBytes(StandardCharsets.UTF_8));

}

catch (final IOException ioe)

{

throw new UncheckedIOException(ioe);

}

});

}

}

public class AgentToServerProtocolReceiver

{

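private final AgentToServerApplicationProtocol target;

public AgentToServerProtocolReceiver(final AgentToServerApplicationProtocol target)

{

this.target = target;

}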

void dispatch(final InputStream stream)

{

// ... parses the json off the stream, and invokes the appropriate method on target...

}

}

…and here’s our test code

private final AgentToServerApplicationProtocol application =

mockery.mock(AgentToServerApplicationProtocol.class);

private final AgentToServerProtocolReceiver agentToServerProtocolReceiver =

new AgentToServerProtocolReceiver(application);

private final AgentToServerProxy sender = new AgentToServerProxy(sendee ->

{

final ByteArrayOutputStream baos = new ByteArrayOutputStream(1024);

sendee.accept(baos);

final ByteArrayInputStream bais = new ByteArrayInputStream(baos.toByteArray());

agentToServerProtocolReceiver.dispatch(bais);

});

@Test

public void roundTripReportAgentPhase()

{

mockery.checking(new Expectations()

{{

oneOf(application).reportAgentPhase(AgentPhase.FAILED);

}});

sender.reportAgentPhase(AgentPhase.FAILED);

}

Here, the ‘message’ of reportAgentPhase is serialized and deserialized in the same test. We assert that the method is appropriately called on the mocked target. N.B All of our method parameters need to override equals() correctly for this to work. Bring me data classes, java 10!
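To make the equals() caveat concrete, here is a minimal sketch of the sort of hand-written value class a parameter needs to be. Status is a hypothetical type invented for illustration; it is not the real AgentStatus.

```java
import java.util.Objects;

final class ValueEqualitySketch
{
    // Hypothetical parameter type with value semantics.
    static final class Status
    {
        private final String host;
        private final int queuedCommands;

        Status(final String host, final int queuedCommands)
        {
            this.host = Objects.requireNonNull(host);
            this.queuedCommands = queuedCommands;
        }

        @Override
        public boolean equals(final Object other)
        {
            if (this == other)
            {
                return true;
            }
            if (!(other instanceof Status))
            {
                return false;
            }
            final Status that = (Status) other;
            return queuedCommands == that.queuedCommands && host.equals(that.host);
        }

        @Override
        public int hashCode()
        {
            return Objects.hash(host, queuedCommands);
        }
    }

    public static void main(final String[] args)
    {
        final Status sent = new Status("agent-1", 3);
        // Stands in for the separate instance the receiver rebuilds from the wire.
        final Status deserialized = new Status("agent-1", 3);
        System.out.println("same instance: " + (sent == deserialized));
        System.out.println("equal values:  " + sent.equals(deserialized));
    }
}
```

Without the override, the mock would compare the deserialized instance to the original by identity, and the expectation would never match.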

What’s particularly nice about this construction is that generalizing the test to multiple ‘messages’ is delightfully simple. We can write a method like the following:

private void assertApplicationRoundTrip(final Consumer<AgentToServerApplicationProtocol> consumer)

{

mockery.checking(new Expectations()

{{

consumer.accept(oneOf(application));

}});

consumer.accept(sender);

}

and all our tests now just look like

@Test

public void roundTripReportAgentPhase()

{

assertApplicationRoundTrip(application -> application.reportAgentPhase(AgentPhase.FAILED));

}

We can even go further than this. In our particular scenario here, we know that our InputStream always contains only a single message. In more challenging serialization environments, we could be called with a fragment, or a stream containing multiple RPCs. Can we test for those cases as well?

Example 2: Reducto

Yes we can. Never ask a rhetorical question that you do not know the answer to.

In our first example, we live in the happy world of websockets, where fragmentation is a long forgotten nightmare, and batching is something that happens to baked goods.

We don’t have the same fortune in reducto’s RPC layer. We kindly ask netty to connect agent and server via TCP, and it very effectively does so. We then rudely shove arbitrarily large blobs of binary at those connections (a single rpc could span several packets).

This means that we might not have as much symmetry as we thought:

This is what the transmitter might do

receive method invocation at index 1 with args a and b

send 1 + serialize(a) + serialize(b) to the connection

receive method invocation at index 1 with args c and d

send 1 + serialize(c) + serialize(d) to the connection

receive method invocation at index 2 with no args

send 2 to the connection

Now, depending on the size of a, b, c and d, the receiver might get:

1. a single packet containing all of these invocations

2. a packet containing the index 1, and the first few bytes of a

a packet containing the middle few bytes of a

...and so on...

a packet containing the end of d, and finally, 2, to indicate the last call

3. ...

4. Loss. Deep, deep, loss.

We still want to guarantee that the real receiver’s inner implementation gets those same messages in the same order, despite whatever batching and fragmentation occurs on the wire.
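For a sense of what the receiving side has to cope with, here is a minimal sketch of a reassembling receiver. It assumes each message carries a 4-byte length prefix, which is an illustrative choice and not necessarily reducto’s actual wire format; FrameAssembler, frame and deliverInChunks are hypothetical names.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

final class ReassemblySketch
{
    // Accumulates arbitrary chunks and emits only complete, length-prefixed messages.
    static final class FrameAssembler
    {
        private final Consumer<byte[]> onMessage;
        private byte[] pending = new byte[0];

        FrameAssembler(final Consumer<byte[]> onMessage)
        {
            this.onMessage = onMessage;
        }

        void onBytes(final byte[] chunk)
        {
            // Append the new chunk to whatever was left over from earlier chunks.
            final byte[] combined = Arrays.copyOf(pending, pending.length + chunk.length);
            System.arraycopy(chunk, 0, combined, pending.length, chunk.length);

            final ByteBuffer buffer = ByteBuffer.wrap(combined);
            while (buffer.remaining() >= Integer.BYTES)
            {
                buffer.mark();
                final int length = buffer.getInt();
                if (buffer.remaining() < length)
                {
                    // Incomplete message: rewind to the prefix and wait for more bytes.
                    buffer.reset();
                    break;
                }
                final byte[] payload = new byte[length];
                buffer.get(payload);
                onMessage.accept(payload);
            }
            // Keep any trailing partial message for the next call.
            pending = new byte[buffer.remaining()];
            buffer.get(pending);
        }
    }

    static byte[] frame(final String message)
    {
        final byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(Integer.BYTES + payload.length)
            .putInt(payload.length)
            .put(payload)
            .array();
    }

    // Frames the messages back to back, then feeds them to an assembler chunkSize bytes at a time.
    static List<String> deliverInChunks(final List<String> messages, final int chunkSize)
    {
        final ByteArrayOutputStream wire = new ByteArrayOutputStream();
        for (final String message : messages)
        {
            final byte[] framed = frame(message);
            wire.write(framed, 0, framed.length);
        }
        final byte[] bytes = wire.toByteArray();

        final List<String> received = new ArrayList<>();
        final FrameAssembler assembler = new FrameAssembler(
            payload -> received.add(new String(payload, StandardCharsets.UTF_8)));
        for (int start = 0; start < bytes.length; start += chunkSize)
        {
            assembler.onBytes(Arrays.copyOfRange(bytes, start, Math.min(bytes.length, start + chunkSize)));
        }
        return received;
    }

    public static void main(final String[] args)
    {
        final List<String> messages = List.of("1:a,b", "1:c,d", "2");
        System.out.println(deliverInChunks(messages, 3));    // heavy fragmentation
        System.out.println(deliverInChunks(messages, 1024)); // everything in one batch
    }
}
```

Whatever the chunk size, the assembler should hand over the same messages in the same order, which is exactly the guarantee we are after.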

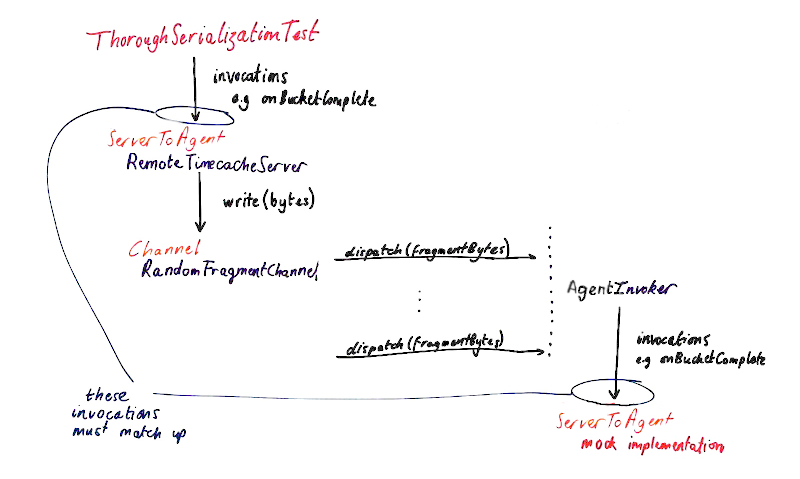

This doesn’t just need a round trip test. It needs a round trip fuzz test.

A round trip test with fuzzy batching

We can use much of the same equipment as we did previously: we invoke methods on our transmitter and, at the end, verify that our receiver has had those same messages invoked on it, in the same order (although, given the nature of TCP, the ordering is perhaps less important).

The only difference is that we are going to reach into the passthrough byte streams, and chunk them arbitrarily (randomly, in fact).

We’ll look at the server to agent communication in reducto, because the interface is smaller.

import java.nio.ByteBuffer;

import java.time.ZonedDateTime;

import java.util.Optional;

public interface ServerToAgent {

void installDefinitions(String className);

void populateBucket(

String cacheName,

long currentBucketStart,

long currentBucketEnd);

void iterate(

String cacheName,

long iterationKey,

ZonedDateTime from,

ZonedDateTime toExclusive,

String installingClass,

String definitionName,

Optional<ByteBuffer> wireFilterArgs);

void defineCache(

String cacheName,

String cacheComponentFactoryClass);

}

We’ll also need the following interfaces:

public interface Channel

{

void write(ByteBuf buffer);

ByteBuf alloc(int messageLength);

}

interface FlushableChannel extends Channel

{

void flush();

}

interface Invocation<T>

{

void run(final T t);

}

…and then our implementation looks as follows:

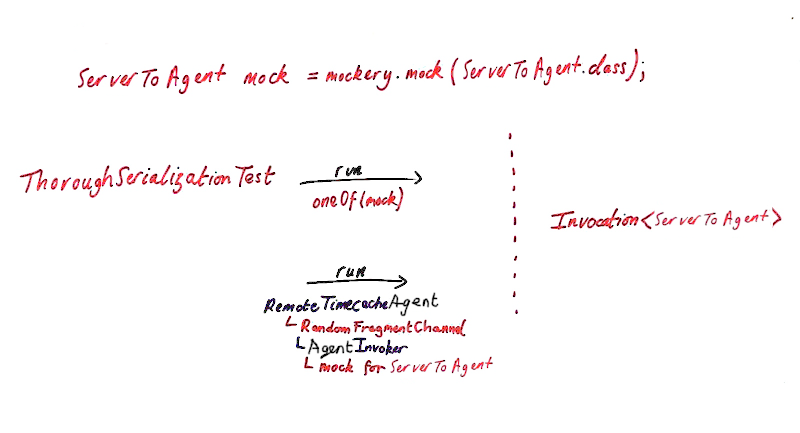

static <T> void runTest(

final Mockery mockery,

final Class<T> target,

final Function<T, Consumer<ByteBuf>> invokerFactory,

final Function<Channel, T> stubCreator,

final List<Invocation<T>> possibleInvocations

)

{

final Random random = new Random(System.currentTimeMillis());

final T mock = mockery.mock(target, "thorough serialization target");

final Consumer<ByteBuf> invoker = invokerFactory.apply(mock);

runWith(mockery, stubCreator, possibleInvocations, random, mock, new RandomFragmentChannel(random, invoker));

}

private static <T> void runWith(

final Mockery mockery,

final Function<Channel, T> stubCreator,

final List<Invocation<T>> possibleInvocations,

final Random random,

final T mock,

final FlushableChannel channel)

{

final T stub = stubCreator.apply(channel);

final List<Invocation<T>> invocations = generateList(

random, r -> possibleInvocations.get(r.nextInt(possibleInvocations.size())));

for (Invocation<T> invocation : invocations)

{

mockery.checking(

new Expectations()

{{

invocation.run(oneOf(mock));

}}

);

invocation.run(stub);

}

channel.flush();

}

The generic T represents the interface we are attempting to call remotely. The stubCreator is misnamed: it actually creates the transmitter, or proxy, side. The invokerFactory creates the receiving end: it wraps a given instance of T, parsing messages from the ByteBufs passed in.

We foolishly seed our Random with the current time. The magic is mostly in RandomFragmentChannel so we’d best have a good look at it.

private static class RandomFragmentChannel implements Channel, FlushableChannel

{

private final ByteBufAllocator allocator;

private final Random random;

private final Consumer<ByteBuf> invoker;

RandomFragmentChannel(Random random, Consumer<ByteBuf> invoker)

{

this.random = random;

this.invoker = invoker;

this.allocator = new UnpooledByteBufAllocator(false, true);

}

@Override

public void write(ByteBuf buffer)

{

int delivered = 0;

int toDeliver = buffer.readableBytes();

while (delivered < toDeliver)

{

int remaining = toDeliver - delivered;

int bufSize = 1 + random.nextInt(remaining);

ByteBuf actual = allocator.buffer(bufSize);

buffer.readBytes(actual);

invoker.accept(actual);

delivered += bufSize;

}

}

@Override

public ByteBuf alloc(int messageLength)

{

return allocator.buffer(messageLength);

}

@Override

public void flush()

{

}

}

Looking at this closely, we can see that this class is quite well named. It only fragments; it never batches. Each buffer passed to it is delivered to the invoker within the same call; it may just end up being torn into several pieces before that happens.

We could go further, and equip our fragmenter with a buffer of its own, so it can both fragment and batch randomly. I…am not entirely sure why I did not do that at the time. Instead what seems to have happened is this: I added a differently behaving channel, and ran the fuzz test with both, as follows:

static <T> void runTest(

final Mockery mockery,

final Class<T> target,

final Function<T, Consumer<ByteBuf>> invokerFactory,

final Function<Channel, T> stubCreator,

final List<Invocation<T>> possibleInvocations

)

{

final Random random = new Random(System.currentTimeMillis());

final T mock = mockery.mock(target, "thorough serialization target");

final Consumer<ByteBuf> invoker = invokerFactory.apply(mock);

runWith(mockery, stubCreator, possibleInvocations, random, mock, new RandomFragmentChannel(random, invoker));

runWith(mockery, stubCreator, possibleInvocations, random, mock, new OneGiantMessageChannel(invoker));

// ^^^^^^^ this was mysteriously omitted earlier

}

private static class OneGiantMessageChannel implements Channel, FlushableChannel

{

private final Consumer<ByteBuf> invoker;

private final UnpooledByteBufAllocator allocator;

private ByteBuf buffer;

OneGiantMessageChannel(Consumer<ByteBuf> invoker)

{

this.invoker = invoker;

this.allocator = new UnpooledByteBufAllocator(false, true);

this.buffer = this.allocator.buffer(128);

}

@Override

public void write(final ByteBuf inboundBuffer)

{

if (this.buffer.writableBytes() < inboundBuffer.readableBytes())

{

final ByteBuf newBuf = allocator.buffer(this.buffer.capacity() * 2);

this.buffer.readBytes(newBuf, this.buffer.readableBytes());

this.buffer = newBuf;

write(inboundBuffer);

}

else

{

inboundBuffer.readBytes(this.buffer, inboundBuffer.readableBytes());

}

}

@Override

public ByteBuf alloc(int messageLength)

{

return allocator.buffer(messageLength);

}

@Override

public void flush()

{

invoker.accept(buffer);

}

}

An aside: I particularly like class names that start with One, because it immediately reminds me of old Radio 1 soundbytes where they were trying to advertise “One Big Sunday”; a universally awful music event, with a really good marketing team. The soundbyte mostly consisted of someone saying “One Big Sunday” in a rhythmically pleasing way. I can hear the class name “One Giant Message Channel” in that same voice.

Anyway. At some point, I should probably go and write the one true RandomFragmentingBatchingChannel implementation, and simplify this. Or there might be value in keeping all three.
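For what it’s worth, a combined channel might look something like the sketch below. It is not the reducto implementation: to stay self-contained it works on plain byte arrays rather than netty’s ByteBuf, and passThrough is a hypothetical helper for exercising it.

```java
import java.io.ByteArrayOutputStream;
import java.util.Arrays;
import java.util.List;
import java.util.Random;
import java.util.function.Consumer;

final class FragmentAndBatchSketch
{
    // Buffers writes, then releases them in randomly sized pieces that ignore write boundaries.
    static final class RandomFragmentingBatchingChannel
    {
        private final Random random;
        private final Consumer<byte[]> invoker;
        private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

        RandomFragmentingBatchingChannel(final Random random, final Consumer<byte[]> invoker)
        {
            this.random = random;
            this.invoker = invoker;
        }

        void write(final byte[] bytes)
        {
            pending.write(bytes, 0, bytes.length);
            // Half the time hold everything back, so later writes get batched with this one.
            if (random.nextBoolean())
            {
                // The piece may end mid-write (fragmenting) or span several writes (batching).
                deliver(random.nextInt(pending.size() + 1));
            }
        }

        void flush()
        {
            // Drain whatever is left, still in randomly sized pieces.
            while (pending.size() > 0)
            {
                deliver(1 + random.nextInt(pending.size()));
            }
        }

        private void deliver(final int count)
        {
            if (count == 0)
            {
                return;
            }
            final byte[] all = pending.toByteArray();
            invoker.accept(Arrays.copyOfRange(all, 0, count));
            // Keep the undelivered tail for later.
            pending.reset();
            pending.write(all, count, all.length - count);
        }
    }

    // Hypothetical helper: pushes the writes through the channel and concatenates what the invoker received.
    static byte[] passThrough(final long seed, final List<byte[]> writes)
    {
        final ByteArrayOutputStream received = new ByteArrayOutputStream();
        final RandomFragmentingBatchingChannel channel = new RandomFragmentingBatchingChannel(
            new Random(seed), piece -> received.write(piece, 0, piece.length));
        writes.forEach(channel::write);
        channel.flush();
        return received.toByteArray();
    }

    public static void main(final String[] args)
    {
        final List<byte[]> writes = List.of(new byte[] {1, 10, 11}, new byte[] {1, 12, 13}, new byte[] {2});
        System.out.println(Arrays.toString(passThrough(System.nanoTime(), writes)));
    }
}
```

The piece boundaries vary with the seed, but the bytes that come out the other side should always be the bytes that went in, in the same order.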

Are we done? Not quite. We could do even better.

Even more randomness

Up until now, we’ve just been given a list of possible Invocations and then boldly claimed that, as they pass through serialization unharmed, despite batching or fragmentation, everything must be ok.

What does the call site of our tests look like? Have we covered the full space of Invocations?

private static final List<Invocation<ServerToAgent>> ALL_POSSIBLE_INVOCATIONS =

Arrays.asList(

agent -> agent.defineCache("foo", "bar"),

agent -> agent.installDefinitions("foo"),

agent -> agent.iterate(

"foo",

252252L,

ZonedDateTime.ofInstant(Instant.ofEpochMilli(645646L), ZoneId.of("UTC")),

ZonedDateTime.ofInstant(Instant.ofEpochMilli(54964797289L), ZoneId.of("UTC")),

"bar",

"baz",

Optional.empty()),

agent -> agent.populateBucket("foo", 23298L, 2352L)

);

We have not. We could tweak the definition of runTest to take Function<Random, List<Invocation<T>>> instead of List<Invocation<T>> for even more fuzzing power.

static <T> void runTest(

final Mockery mockery,

final Class<T> target,

final Function<T, Consumer<ByteBuf>> invokerFactory,

final Function<Channel, T> stubCreator,

final Function<Random, List<Invocation<T>>> possibleInvocations

)

{

final Random random = new Random(System.currentTimeMillis());

final T mock = mockery.mock(target, "thorough serialization target");

final Consumer<ByteBuf> invoker = invokerFactory.apply(mock);

runWith(mockery, stubCreator, possibleInvocations.apply(random), random, mock, new RandomFragmentChannel(random, invoker));

}

That should find plenty of bugs, assuming we are capable of writing a sufficiently expressive function to generate those invocations.
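As a rough idea of what such a generator could look like, here is a sketch against a cut-down interface. Agent is a hypothetical two-method stand-in for ServerToAgent, and GENERATOR, randomInvocation, randomString and record are names made up for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.function.Function;

final class RandomInvocationsSketch
{
    interface Invocation<T>
    {
        void run(T t);
    }

    // Cut-down stand-in for ServerToAgent, so the sketch stays self-contained.
    interface Agent
    {
        void installDefinitions(String className);

        void populateBucket(String cacheName, long currentBucketStart, long currentBucketEnd);
    }

    static String randomString(final Random random)
    {
        final int length = random.nextInt(64);
        final StringBuilder builder = new StringBuilder(length);
        for (int i = 0; i < length; i++)
        {
            builder.append((char) ('a' + random.nextInt(26)));
        }
        return builder.toString();
    }

    static Invocation<Agent> randomInvocation(final Random random)
    {
        // Arguments are drawn eagerly, outside the lambda: each invocation is run twice
        // (once to set the expectation, once on the transmitter) and must replay identically.
        if (random.nextBoolean())
        {
            final String className = randomString(random);
            return agent -> agent.installDefinitions(className);
        }
        final String cacheName = randomString(random);
        final long start = random.nextLong();
        final long end = random.nextLong();
        return agent -> agent.populateBucket(cacheName, start, end);
    }

    // The shape runTest would accept: a Random in, a list of invocations out.
    static final Function<Random, List<Invocation<Agent>>> GENERATOR = random ->
    {
        final int count = 1 + random.nextInt(20);
        final List<Invocation<Agent>> invocations = new ArrayList<>(count);
        for (int i = 0; i < count; i++)
        {
            invocations.add(randomInvocation(random));
        }
        return invocations;
    };

    // Replays the invocations on a recording Agent, to show what each one does.
    static List<String> record(final List<Invocation<Agent>> invocations)
    {
        final List<String> calls = new ArrayList<>();
        final Agent recorder = new Agent()
        {
            @Override
            public void installDefinitions(final String className)
            {
                calls.add("installDefinitions(" + className + ")");
            }

            @Override
            public void populateBucket(final String cacheName, final long start, final long end)
            {
                calls.add("populateBucket(" + cacheName + ", " + start + ", " + end + ")");
            }
        };
        invocations.forEach(invocation -> invocation.run(recorder));
        return calls;
    }

    public static void main(final String[] args)
    {
        record(GENERATOR.apply(new Random(System.nanoTime()))).forEach(System.out::println);
    }
}
```

The one trap worth pointing out is drawing random values inside the lambda: the expectation and the real call would then see different arguments, and every test would fail for the wrong reason.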

Conclusion

We’ve put together some fuzz tests for two serialization layers.

We’ve managed to avoid talking about the other name for this technique: Property Based Testing. What we’ve tried to do is prove the ‘symmetric rpc delivery’ property about both of our serialization libraries using a fuzz mechanism.

We could have picked one of the QuickChecks and hypothesises (hypotheses?) of this world to help us out. Why? Well, they have features that our tiny framework here does not have; notably:

- They are capable of taking a randomly generated input that causes failure, and ‘shrinking’ it to a minimal failing example.

- They can much more easily tune precisely how many runs each particular test should get.

- The choice of seed (and, later, its exposure to facilitate debugging) is more sensibly handled.
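The third point, at least, can be approximated in our tiny framework without much effort. The sketch below is one possible approach, not what reducto does: the fuzz.seed system property and the chooseSeed and runSeeded helpers are invented for this example.

```java
import java.util.Random;
import java.util.function.Consumer;

final class SeededFuzzSketch
{
    // Uses -Dfuzz.seed=... when present (a hypothetical property name), else picks a fresh seed.
    static long chooseSeed()
    {
        final String override = System.getProperty("fuzz.seed");
        return override != null ? Long.parseLong(override) : System.nanoTime();
    }

    // Runs the test body with a seeded Random and reports the seed if the body fails.
    static void runSeeded(final long seed, final Consumer<Random> test)
    {
        try
        {
            test.accept(new Random(seed));
        }
        catch (final RuntimeException | AssertionError failure)
        {
            throw new AssertionError("Fuzz test failed; rerun with -Dfuzz.seed=" + seed, failure);
        }
    }

    public static void main(final String[] args)
    {
        final long seed = chooseSeed();
        runSeeded(seed, random -> System.out.println("first draw: " + random.nextInt(100)));
    }
}
```

A failing run then tells us how to reproduce it, which is most of what we need from a seed.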

These frameworks might make the job of writing (and maintaining) these sorts of tests simpler. We mention them here mostly for completeness, as we didn’t end up needing their power in either of our examples. I suspect that moving to randomly generated Invocations in the second case would be a good point to switch, however.

Finally, some food for thought. Up until now, I would have offered the following statement about the relationship between testability and software:

High quality software easily admits testing.

I should probably reduce that to

All the high quality software I have seen has a foundational commitment to testability.

but let’s go with the slightly bolder version, so we can ask the following:

Would it be even better to say:

Higher quality software easily admits property based tests

?